EU and AI

Artificial intelligence (AI) can help find solutions to many of society’s problems. This can only be achieved if the technology is of high quality and is developed and used in ways that earn people’s trust. An EU strategic framework based on EU values will therefore give citizens the confidence to accept AI-based solutions, while encouraging businesses to develop and deploy them.

This is why the European Commission has proposed a set of actions to boost excellence in AI, and rules to ensure that the technology is trustworthy.

The Regulation on a European Approach for Artificial Intelligence and the update of the Coordinated Plan on AI will guarantee the safety and fundamental rights of people and businesses, while strengthening investment and innovation across EU countries.

Building trust through the first-ever legal framework on AI

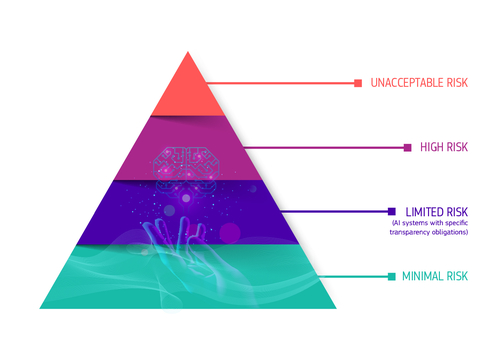

The Commission is proposing new rules to make sure that AI systems used in the EU are safe, transparent, ethical, unbiased and under human control. To this end, AI systems are categorised by risk:

Unacceptable risk: Anything considered a clear threat to EU citizens will be banned, from social scoring by governments to toys using voice assistance that encourages dangerous behaviour in children.

High risk: this includes AI technology used in:

- Critical infrastructure (e.g. transport) that could put the life and health of citizens at risk

- Education or vocational training that may determine someone’s access to education and the professional course of their life (e.g. scoring of exams)

- Safety components of products (e.g. AI application in robot-assisted surgery)

- Employment, management of workers and access to self-employment (e.g. CV-sorting software for recruitment procedures)

- Essential private and public services (e.g. credit scoring that denies citizens the opportunity to obtain a loan)

- Law enforcement that may interfere with people’s fundamental rights (e.g. evaluation of the reliability of evidence)

- Migration, asylum and border control management (e.g. verification of the authenticity of travel documents)

- Administration of justice and democratic processes (e.g. applying the law to a concrete set of facts)

High-risk AI systems will all be carefully assessed before being placed on the market and throughout their lifecycle.

Limited risk: AI systems such as chatbots are subject to minimal transparency obligations, intended to allow those interacting with them to make informed decisions. Users can then decide whether to continue using the application or step back from it.

Minimal risk: Applications such as AI-enabled video games or spam filters can be used freely. The vast majority of AI systems fall into this category, where the new rules do not intervene, as these systems represent only minimal or no risk to citizens’ rights or safety.

Source: EU and AI